After that brief hiatus, we return to the proof of Hardy’s theorem that the Riemann zeta function has infinitely many zeros on the critical line; it’s probably best to go and brush up on part one first.

Two things remain to complete the proof: to show that

is small and that

is large, for some suitably chosen kernel and all large

.

For no particular reason, we’ll deal with the latter part first. By our choice of we need to give a lower bound for

Of course, it’s very difficult to estimate this integral without first deciding what our choice of kernel is going to be. The reason we want to introduce the kernel is to help us estimate (1), by allowing us to control the cancellation. We can actually deal with the mean values of

quite well, so all we need from the kernel at the moment is that it won’t ruin things too much. In particular, because we want to show that (2) is large, it’s enough to know that

doesn’t decay too quickly, and then we can just use the trivial bound.

Making a (somewhat) arbitrary choice about what this means, all that we’ll assume about the kernel in this post is that it satisfies

so that we can bound (2) by

All that we need now is a bound such as:

Using the above and the triangle inequality we deduce that

which is good enough combined with the upcoming estimates for (1) to yield Hardy’s theorem.

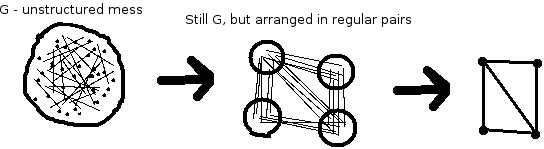

Great! All we need to do now is prove (3), a mean-value estimate for the zeta function. This is classical analytic number theory, and there is a standard approach to integrals involving the Riemann zeta function: use Cauchy’s theorem to move the path of integration across to the half-plane $\Re(s) > 1$, picking up some acceptable error along the way, and then use the nice Dirichlet series for the zeta function in that half-plane to do things explicitly.

Let be the path from

to

and let

be the path from

to

. Since $\zeta(s)$ only has a pole at $s = 1$, and as long as

we certainly avoid that, it follows from Cauchy’s theorem that

Let’s first deal with the ‘error terms’ here. Since we’re happy with a lower bound of order we can dispatch these error terms using the trivial bound as long as we knew that

uniformly for , say. Of course, we need something about the zeta function beyond its Dirichlet series representation here, but we don’t need much, just the identity

valid for any and

(assuming

). This identity comes from the Dirichlet series for $\zeta(s)$ using partial summation; see any text on analytic number theory for details.

We now bound everything very crudely for where

, giving

and we’re done by choosing . That’s the error terms dealt with, so it only remains to show that
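For concreteness, here is one way the crude bound can go. This is a sketch under assumptions: it takes the identity to be the standard partial summation formula, writes $s = \sigma + iT$ with $1/2 \le \sigma \le 2$ and $T \ge 1$, and uses constants chosen for convenience rather than taken from the post. For $\sigma > 0$, an integer $N \ge 1$ and $s \ne 1$,

$$\zeta(s) = \sum_{n \le N} n^{-s} + \frac{N^{1-s}}{s-1} - s \int_N^\infty \{u\}\, u^{-s-1}\,du,$$

where $\{u\}$ denotes the fractional part of $u$. Bounding each piece trivially, using $|s - 1| \ge T$, $|s| \le T + 2$ and $\sigma \ge 1/2$,

$$|\zeta(\sigma + iT)| \le \sum_{n \le N} n^{-1/2} + \frac{N^{1/2}}{T} + (T + 2) \int_N^\infty u^{-3/2}\,du \le 2N^{1/2} + \frac{N^{1/2}}{T} + \frac{2(T+2)}{N^{1/2}}.$$

Taking $N$ to be an integer with $T \le N \le 2T$ gives $\zeta(\sigma + iT) \ll T^{1/2}$ uniformly in this range of $\sigma$. Each horizontal segment has bounded length, so it contributes $O(T^{1/2})$, which is harmless against a main term that grows linearly in $T$.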

Finally we’re on safe ground, since we’re only dealing with the zeta function in the half-plane $\Re(s) > 1$, where we have a Dirichlet series and everything is rosy. So

where the main term comes from the $n = 1$ summand and the other summands are bounded above by an absolute constant.
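The term-by-term integration is easy to check numerically. The following is a minimal sketch, assuming the vertical line has real part 2 (any abscissa greater than 1 would do) and integrating with respect to $t$ rather than $s$, which changes the integral only by a factor of $i$. The function names `integral_zeta_on_line_2` and `tail_bound` are made up for this illustration, and the Dirichlet series is truncated, so the result is an approximation.

```python
import cmath
import math


def integral_zeta_on_line_2(T, terms=2000):
    """Approximate the integral of zeta(2 + it) dt over T <= t <= 2T.

    The Dirichlet series sum n^(-2 - it) is truncated at `terms`
    and integrated term by term in closed form.
    """
    total = complex(T)  # n = 1 summand: the integrand is identically 1
    for n in range(2, terms + 1):
        log_n = math.log(n)
        # Antiderivative of n^(-it) in t is n^(-it) / (-i log n).
        upper = cmath.exp(-2j * T * log_n)  # n^(-it) at t = 2T
        lower = cmath.exp(-1j * T * log_n)  # n^(-it) at t = T
        total += (upper - lower) / (-1j * log_n * n * n)
    return total


def tail_bound(terms=2000):
    """Trivial bound for the n >= 2 summands: each is at most 2 / (n^2 log n)."""
    return sum(2.0 / (n * n * math.log(n)) for n in range(2, terms + 1))


if __name__ == "__main__":
    for T in (10.0, 100.0, 1000.0):
        value = integral_zeta_on_line_2(T)
        print(T, abs(value - T), tail_bound())
```

Whatever the value of `T`, the difference between the integral and `T` stays below `tail_bound()`, which does not depend on `T`. That is exactly the shape of the claim in the text: a main term from $n = 1$ plus a bounded remainder.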

That’s it, we’re done. To recap, we’ve shown that

using only the assumption that and some quite crude information about the Riemann zeta function – all that one needs is knowledge of Cauchy’s theorem, the Dirichlet series representation for the zeta function and a dash of partial summation. We’ll need slightly more for the rest of the proof in the next post, but for now, Hardy’s theorem is not as formidable as it might seem!